Throughout 2009 you’ll probably hear a lot of talk about the semantic web, aka “Web 3.0”.

The semantic wave builds on the previous stages of internet growth. The first stage, Web 1.0, was about connecting information and getting on the net. Web 2.0 is about connecting people: the web of social networks and participation. It has been all the rage over the past few years and is now part of mainstream internet culture.

The emerging stage, Web 3.0, is starting now. It is about connecting knowledge and putting it to work in ways that make our experience of the internet more relevant, useful, and enjoyable. Google has recently started bringing this technology into its search results.

ReadWriteWeb comments:

“…In what appears to us to be a new addition to many Google search results pages, queries about birth dates, family connections and other information are now being responded to with explicitly semantic structured information. Who is Bill Clinton’s wife? What’s the capital city of Oregon? What is Britney Spears’ mother’s name? The answers to these and other factual questions are now displayed above natural search results in Google…”

Source: Marshall Kirkpatrick, ReadWriteWeb, January 6, 2009

Web 4.0 will come later. It will be about connecting people and things (internet-enabled objects) so that they can reason and communicate together.

Project10X illustrates how the internet will evolve over the next decade like this:

Source: Nova Spivack, Radar Networks; John Breslin, DERI; & Mills Davis, Project10X

Getting Your Head Around the ‘Semantic Web’

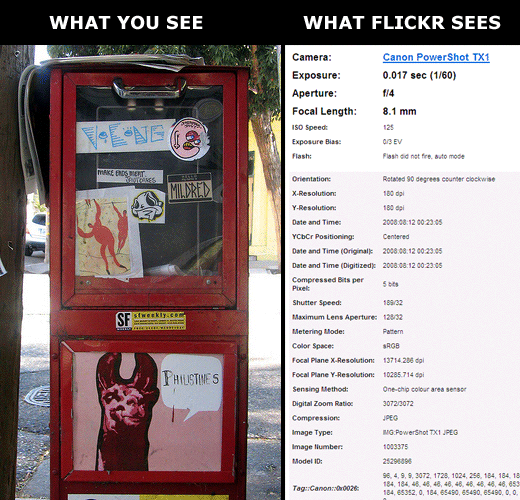

The core idea behind the semantic web is a web where "things" (like a JPG image, for example) can be read and understood by computers. If you dig around Flickr, you'll see evidence of this already in action.

If you have a modern digital camera, it captures more than the visual image. Almost all new digital cameras save JPEG (.jpg) files with EXIF (Exchangeable Image File Format) data: camera settings and scene information that the camera records into the image file itself. Examples of stored information are shutter speed, date and time, focal length, exposure compensation, metering pattern and whether the flash fired.

Visit my Flickr account to see the huge range of EXIF data captured when I took this photo on a visit to San Francisco last year.

Using this data, Flickr lets you browse other photos taken with the same camera model as mine, as well as photos taken by other people on the same day. If my camera were GPS-enabled, Flickr could also show photos taken on the same street!
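Here's a rough idea of how that kind of matching could work. This is a minimal Python sketch, not Flickr's actual implementation: the photo records, camera names and the `id` field are hypothetical, while `Model` and `DateTimeOriginal` are real EXIF tag names, and EXIF stores dates in the `YYYY:MM:DD HH:MM:SS` format.

```python
from datetime import date, datetime

# Hypothetical photo records. "Model" and "DateTimeOriginal" are real EXIF
# tag names; the "id" field and all values are invented for illustration.
PHOTOS = [
    {"id": "sf-001", "Model": "ExampleCam X100", "DateTimeOriginal": "2008:05:14 10:32:07"},
    {"id": "p-002", "Model": "ExampleCam X100", "DateTimeOriginal": "2008:06:01 09:00:00"},
    {"id": "p-003", "Model": "OtherCam Z5", "DateTimeOriginal": "2008:05:14 18:45:30"},
    {"id": "p-004", "Model": "OtherCam Z5", "DateTimeOriginal": "2008:07:20 12:00:00"},
]


def capture_date(photo: dict) -> date:
    """Parse the EXIF 'YYYY:MM:DD HH:MM:SS' timestamp and keep only the day."""
    return datetime.strptime(photo["DateTimeOriginal"], "%Y:%m:%d %H:%M:%S").date()


def related_photos(target: dict, photos: list) -> tuple:
    """Return (same-camera ids, same-day ids) for every photo except target."""
    others = [p for p in photos if p["id"] != target["id"]]
    same_camera = [p["id"] for p in others if p["Model"] == target["Model"]]
    day = capture_date(target)
    same_day = [p["id"] for p in others if capture_date(p) == day]
    return same_camera, same_day


if __name__ == "__main__":
    print(related_photos(PHOTOS[0], PHOTOS))  # (['p-002'], ['p-003'])
```

Adding GPS coordinates would work the same way: one more EXIF field to compare, with a distance threshold instead of an exact match.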

By using publicly available data like this, the semantic web can better understand our search queries and how people, objects and information are linked with each other. It opens up a new world of possibilities.
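To make "linked" a little more concrete: semantic web data is usually expressed as subject-predicate-object statements (the triple model used by W3C's RDF). The minimal Python sketch below stores three such facts, echoing the questions in the ReadWriteWeb quote, and answers pattern queries against them. The predicate names (`spouse`, `capital`, `mother`) and the `query` helper are hypothetical; real systems use shared vocabularies and a query language such as SPARQL.

```python
# Facts as (subject, predicate, object) triples. The predicate names are
# hypothetical stand-ins for a real shared vocabulary.
TRIPLES = {
    ("Bill Clinton", "spouse", "Hillary Clinton"),
    ("Oregon", "capital", "Salem"),
    ("Britney Spears", "mother", "Lynne Spears"),
}


def query(triples, subject=None, predicate=None, obj=None):
    """Return matching triples in sorted order; None acts as a wildcard."""
    return sorted(
        t
        for t in triples
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    )


if __name__ == "__main__":
    # "Who is Bill Clinton's wife?"
    print(query(TRIPLES, subject="Bill Clinton", predicate="spouse"))
    # The same data answers the reverse question: "Salem is the capital of what?"
    print(query(TRIPLES, predicate="capital", obj="Salem"))
```

Because every fact has the same shape, a computer can follow links in any direction without someone writing a special-purpose parser for each question.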

To learn more about semantic technology opportunities, the Project10X website has a heady but interesting white paper on the topic.
